Systems and Control Engineering News

“Listening” drone helps find victims needing rescue in disasters

As part of the ImPACT Tough Robotics Challenge Program¹, an initiative of the Cabinet Office of Japan, a Japanese research group has developed the world's first system able to detect acoustic signals, such as voices, from victims needing rescue, even when the victims are difficult to find or are in places where cameras cannot be used. The system combines three technological elements: microphone array technology² that serves as the "robot ears," an interface that visualizes otherwise invisible sounds, and a microphone array that connects easily to a drone and can be used even in rainy weather.

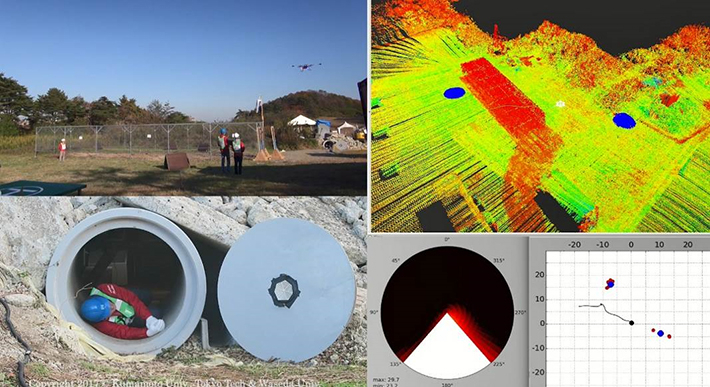

Figure 1. A simulated disaster victim needing rescue is found in rubble (a clay pipe) from audio (voices and whistles).

Blue circles on the map (top right) indicate the detected sound source locations.

Video: Simulation experiment in which the "listening" drone detected voices (YouTube)

Background

"Robot audition" is a research area that was proposed to the world in 2000 by Specially Appointed Professor Kazuhiro Nakadai of Tokyo Institute of Technology (Tokyo Tech) and Professor Hiroshi G. Okuno of Waseda University. Until then, robots could not recognize voices unless a microphone was placed near the speaker's mouth. Research on "robot ears" advanced under the idea that robots, like humans, should hear sound with their own ears. The barrier to entry was high, since the field combines signal processing, robotics, and artificial intelligence. However, vigorous activity after its proposal, including the publication of open source software, culminated in 2014 in its official registration as a research area by the IEEE Robotics and Automation Society (RAS), the largest community for robot research.


The three keys to making "robot ears" a reality are (1) sound source localization, which estimates where a sound is coming from; (2) sound source separation, which extracts the sound arriving from a particular direction; and (3) automatic speech recognition, which recognizes the separated sounds despite background noise, much as humans can understand speech in a noisy place. The research team pursued techniques to implement these keys in real environments and in real time. Like the legendary Japanese Prince Shotoku³, their technology can distinguish simultaneous speech from multiple people. Among other projects, they have demonstrated 11 people ordering meals simultaneously and created a robot game show host that can handle multiple contestants answering at the same time.

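As an illustration of key (1), the sketch below estimates the azimuth of a single sound source by steering a delay-and-sum beamformer around a ring of microphones and picking the direction with the most output power (steered response power). This is a textbook technique swapped in for clarity, not the algorithm used in HARK or in this drone system. It assumes a far-field, single-frequency (narrowband) source, a speed of sound of 343 m/s, and a hypothetical 16-microphone ring of 0.2 m radius; all function names are illustrative.

```python
import cmath
import math

SPEED_OF_SOUND = 343.0  # m/s; assumed value for air at roughly 20 degrees C


def circular_array(n_mics, radius):
    """Hypothetical planar ring of microphones; returns (x, y) positions in metres."""
    return [
        (radius * math.cos(2 * math.pi * m / n_mics),
         radius * math.sin(2 * math.pi * m / n_mics))
        for m in range(n_mics)
    ]


def steering_vector(mics, azimuth, freq):
    """Far-field phase at each mic for a plane wave arriving from `azimuth` (radians).

    A mic lying further toward the source hears the wave earlier, which appears
    as a phase lead of k * (p . u), where u is the unit vector toward the source.
    """
    ux, uy = math.cos(azimuth), math.sin(azimuth)
    k = 2 * math.pi * freq / SPEED_OF_SOUND  # wavenumber in rad/m
    return [cmath.exp(1j * k * (x * ux + y * uy)) for x, y in mics]


def srp_localize(snapshot, mics, freq, n_dirs=360):
    """Scan azimuths and return the one (degrees) with the highest delay-and-sum power."""
    best_deg, best_power = 0.0, -1.0
    for d in range(n_dirs):
        a = steering_vector(mics, 2 * math.pi * d / n_dirs, freq)
        # Delay-and-sum: remove each mic's expected phase, then average coherently.
        y = sum(ai.conjugate() * xi for ai, xi in zip(a, snapshot)) / len(mics)
        power = abs(y) ** 2
        if power > best_power:
            best_deg, best_power = d * 360.0 / n_dirs, power
    return best_deg


# Usage: simulate one 1 kHz source at 120 degrees on a 16-mic, 0.2 m-radius ring.
mics = circular_array(16, 0.2)
snapshot = [0.5 * v for v in steering_vector(mics, math.radians(120), 1000.0)]
print(srp_localize(snapshot, mics, 1000.0))
```

Summing the microphone signals with the phases for the estimated direction also emphasizes sound from that direction over sound from other directions, which is the simplest form of key (2), sound source separation. Practical systems must additionally cope with noise, many frequency bins, and several simultaneous sources.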

Overview of Research Achievement

This technology is the result of extreme audition research carried out as one of the research challenges of the ImPACT Tough Robotics Challenge, a Japanese Cabinet Office initiative led by Program Manager Satoshi Tadokoro of Tohoku University. The team has developed a system that can detect voices, mobile device sounds, and other sounds made by disaster victims through the background noise of a drone, to help rescue victims faster.

Assistant Professor Taro Suzuki of Waseda University provided the high-accuracy point cloud map data, an outcome of his research on high-performance GPS. The extreme audition research group, consisting of Nakadai, Okuno, and Associate Professor Makoto Kumon of Kumamoto University, played the central role in developing this system, the first of its kind in the world.

This system is made up of three main technical elements. The first is microphone array technology based on the robot audition open source software HARK (HRI-JP Audition for Robots with Kyoto University)⁴. HARK has been updated every year since its release in 2008 and had exceeded 120,000 total downloads as of December 2017. The researchers extended HARK to support embedded use while maintaining its noise robustness, and then embedded this version on the drone to keep the system light and take advantage of high-speed data processing. Because microphone array processing can be performed inside the microphone array device attached to the drone, it is not necessary to send all of the captured signals wirelessly to a base station, and the total volume of transmitted data was dramatically reduced, to less than 1/100. This made it possible to detect sound sources even through the noise generated by the drone itself.
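The article reports the result (transmitted data reduced to less than 1/100) but not the underlying figures. The arithmetic below, using hypothetical parameters that are not the system's actual specification, shows why processing on board can cut the radio link load so sharply: streaming every raw channel costs channels × sample rate × bit depth, while sending only localization results costs a few numbers per update.

```python
def raw_stream_bps(channels: int, sample_rate_hz: int, bits_per_sample: int) -> int:
    """Bit rate needed to stream every microphone channel to a base station."""
    return channels * sample_rate_hz * bits_per_sample


def result_stream_bps(updates_per_s: int, sources: int, values_per_source: int,
                      bits_per_value: int) -> int:
    """Bit rate needed to send only localization results computed on board."""
    return updates_per_s * sources * values_per_source * bits_per_value


# Hypothetical figures: 16 mics at 16 kHz / 16 bit versus 10 updates per second,
# each carrying up to 3 sources as (azimuth, elevation, power) in 32-bit floats.
raw = raw_stream_bps(16, 16_000, 16)
results = result_stream_bps(10, 3, 3, 32)
print(f"raw: {raw} bit/s, results: {results} bit/s, ratio: 1/{raw // results}")
```

The exact ratio depends entirely on what is sent; transmitting even one separated audio channel instead of direction estimates would change it considerably.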

The second element is three-dimensional sound source localization with map display, which provides an easy-to-understand visual user interface for otherwise invisible sound sources.
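To place a sound source on a map, a direction of arrival measured on the drone has to be turned into a position. A minimal sketch, assuming that the direction has already been rotated into the world frame using the drone's attitude and that the ground is a flat plane at a known height, intersects the direction ray with that plane. The system described here also used high-accuracy point cloud map data, which a flat plane only approximates; the function name and angle conventions are hypothetical.

```python
import math


def doa_to_ground(drone_xyz, azimuth_deg, elevation_deg, ground_z=0.0):
    """Project a world-frame direction of arrival onto a flat ground plane.

    azimuth_deg is measured counterclockwise from the +x axis; elevation_deg is
    negative when the sound arrives from below the drone. Returns the (x, y, z)
    intersection, or None if the ray never reaches the plane.
    """
    x0, y0, z0 = drone_xyz
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    # Unit vector pointing from the drone toward the sound source.
    dx = math.cos(el) * math.cos(az)
    dy = math.cos(el) * math.sin(az)
    dz = math.sin(el)
    if dz >= 0:
        return None  # level or upward ray: it cannot hit the ground below
    t = (ground_z - z0) / dz  # distance along the ray to the plane
    if t <= 0:
        return None  # drone is at or below the assumed ground level
    return (x0 + t * dx, y0 + t * dy, ground_z)


# Usage: drone hovering 30 m up; sound arrives from azimuth 90 deg, 45 deg below horizontal.
print(doa_to_ground((0.0, 0.0, 30.0), 90.0, -45.0))
```

Each estimated point can then be drawn as a marker on the map, similar to the blue circles in Figure 1.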

The final element is an all-weather microphone array of 16 microphones, all connected by a single cable for easy installation on a drone. This makes it possible to carry out search and rescue even in adverse weather.

Figure 2. Microphone array

Left: the 16-microphone array, which can be connected with a single cable. Right: a drone equipped with the microphone array.

It is generally accepted that the survival probability drops drastically for victims who are not rescued within the first 72 hours after a disaster, so establishing technology for swift search and rescue has been a pressing issue.

Most existing drone-based technologies for finding disaster victims rely on cameras or similar devices. These cannot be used when victims are difficult to find or are in places where cameras are ineffective, for example when they are buried or in the dark, and this has been a major impediment to search and rescue operations. Because this technology detects sounds made by disaster victims, it may help overcome such problems. As drones for finding victims in disaster areas become widely available, it is expected to become a promising tool for rescue teams in the near future.

Future Development

The research group will continue to make the system easier to use and more robust by carrying out demonstrations and experiments under simulated disaster conditions. One goal is to add functionality for classifying sound source types, rather than simply detecting sources, so that relevant sounds from victims can be distinguished from irrelevant ones. Another goal is to develop the system as a package of intelligent sensors that can be connected to various types of drones.

Comments from Satoshi Tadokoro, ImPACT Program Manager

ImPACT Tough Robotics Challenge is advancing its R&D projects with the goal of promoting social innovation in disaster prevention, along with industrial innovation through the creation of new business opportunities. We aim to do this by creating tough, unfaltering technologies essential for robots used in disaster prevention, emergency response, disaster recovery, rescue, and humanitarian contributions.

Information from voices plays a vital role in finding victims needing rescue at disaster sites, but in reality, surrounding noise and the sound of machinery make it very difficult to hear cries for help. When searching for survivors in collapsed houses, all noise is stopped for a "silent time" to listen for voices. Manned helicopters and drones make especially loud noise with their propellers, and until now it has been entirely impossible to listen from them for voices calling from the ground.

This research is a highly disruptive innovation that combines three-dimensional sound source localization and map display with an all-weather microphone array and robust voice signal processing technology. Drones equipped with this technology could identify the voices of people calling out from the ground, or, under favorable circumstances, even from under rubble or indoors, and locate them in three dimensions. In the future, by being installed on drones and on various rescue materials and equipment, this technology is expected to collect voice information that leads to the discovery of victims needing rescue, and thus to many rescues in large-scale earthquake and flood disasters.

1 ImPACT Tough Robotics Challenge (TRC)

An R&D program under the Japanese Cabinet Office's Impulsing Paradigm Change through Disruptive Technologies (ImPACT) Program.

2 Microphone array technology

A technology that uses a microphone array to estimate the direction of sound or to isolate and extract specific sounds; it can be effective even in noisy conditions.

3 Prince Shotoku

A member of the imperial family of Japan in the seventh century. Legend has it that when ten people vying for him to hear their petitions all talked at once, he understood every word each person said and gave each of them an appropriate reply.

4 HARK

The abbreviated name for Honda Research Institute Japan Audition for Robots with Kyoto University, open source software for robot audition developed by Honda Research Institute Japan Co., Ltd. (HRI-JP) and Kyoto University. "Hark" is a Middle English word for "listen."

Impulsing Paradigm Change through Disruptive Technologies Program

Program Manager: Satoshi Tadokoro

R&D Program: Tough Robotics Challenge

R&D Challenge: Search and identification of sound sources using microphone arrays installed on UAVs

(R&D Manager: Kazuhiro Nakadai, Research period: 2014–2018)

- Press release: "Listening" drone helps find victims needing rescue in disasters

- Nakadai Laboratory (Japanese)

- Researcher Profile | Tokyo Tech STAR Search - Kazuhiro Nakadai

- Special Issue on Robot Audition Technologies, Journal of Robotics and Mechatronics (Vol. 29, No. 1)

- Latest Research News

Further Information

Specially Appointed Professor Kazuhiro Nakadai

School of Engineering, Tokyo Institute of Technology

E-mail nakadai@ra.sc.e.titech.ac.jp

Contact

Public Relations Section,

Tokyo Institute of Technology

E-mail media@jim.titech.ac.jp

Tel +81-3-5734-2975